We’re only now starting to see serious cracks in three years’ worth of AI hype. Investment in generative AI has been so huge that seven AI companies currently account for more than a third of the US stock market (as Cory Doctorow has pointed out). Yet there have rarely been more signs that the “bigger is better” type of AI will falter, and crash, in 2026…

1. It will run out of electricity

“I think the limiting factor for AI deployment is fundamentally electrical power. It’s clear that very soon – maybe even later this year – we’ll be producing more chips than we can turn on”, said none other than Elon Musk at Davos yesterday (via Fortune). This is the man currently powering the world’s biggest AI supercomputer with 35 methane generators, because he knew the local grid wouldn’t give him that much power quickly enough (methane generators that the EPA has just confirmed are illegal, btw).

Musk’s comment also confirms what we started reporting last November: several major data centres in the US are currently sitting idle for lack of electricity.

In other words, as we’ve been saying since the get-go, “big AI” is literally unsustainable, and that’s from an electricity point of view alone (never mind all the other environmental impacts).

None of this would be happening without the sector’s dominant, yet totally unnecessary, “bigger is better” obsession. LLMs with around 10 billion parameters are perfectly capable of meeting most people’s needs, such as writing emails or summarising long texts. GPT-5, the model behind ChatGPT, is estimated to have close to 2 trillion. In other words, 800 million people a week are using a model that’s 200 times bigger than they need. Ergo, AI is currently consuming far more energy (and water, and everything else) than it should, given what we actually need it to do.

So smaller models could solve this problem, as could solar power, which Musk himself admits is the reason China doesn’t have an AI electricity issue…

2. The growth ≠ the hype

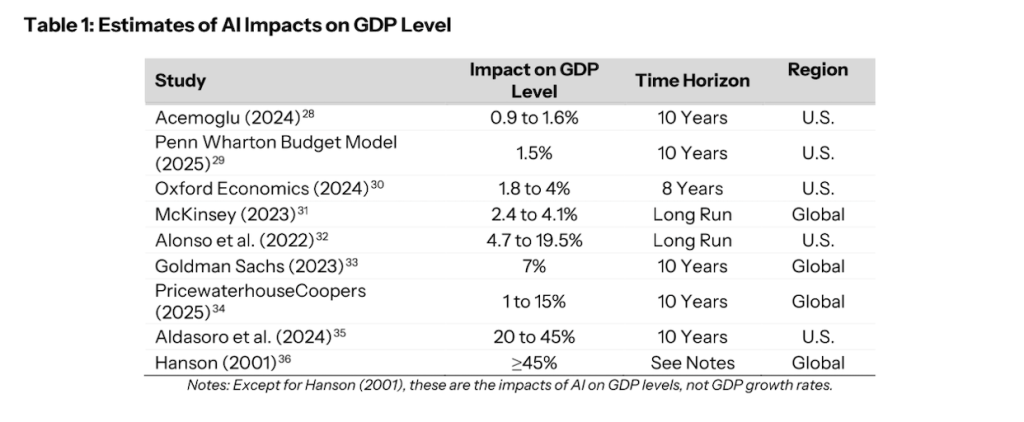

While Microsoft has claimed that AI will boost productivity by around 40%, few others agree. That was confirmed by the latest report from the US Council of Economic Advisers, which compiled research on a similar-but-different KPI: AI’s impact on GDP levels (above). As we can see, most estimates hover below 5%, a few sit at 15-20%, and only a minority reach Microsoft’s 40% level.

Not that the C-suite, or indeed the consultants, are listening: BCG maddeningly predicted in its latest AI Radar that 94% of CEOs will keep investing in AI in 2026, even if it delivers no ROI. That’s almost the same figure MIT NANDA infamously reported as the share of AI projects currently showing no ROI: 95%. Proof that AI hype remains so strong you can still say one thing and its opposite in the same sentence.

And yet even banks are now throwing shade at AI hype. “For most people, this feels less like changing from a horse to a tractor than getting a more comfortable saddle,” said a recent note from Deutsche Bank analysts, which predicts that the “honeymoon” will be over in 2026. The note also shows how usage of open-source models, including Chinese ones, has been gaining significant ground of late: more bad news for our US tech billionaires.

“For this not to be a bubble by definition, it requires that the benefits of this are much more evenly spread”, US tech billionaire Satya Nadella, the boss of Microsoft, said at Davos. In other words, as the Irish Times reported it: it’s a bubble, and if it pops, it’s because AI hasn’t been adopted widely enough. Put more bluntly, it’ll be our fault…

But perhaps one of my favourite hype climbdowns came before Davos, when Dell, the world’s third-largest computer maker, admitted that consumers are not buying PCs “based on AI. In fact, I think AI probably confuses them more than it helps them,” according to Kevin Terwilliger, Dell’s head of product, via PC Gamer. This despite his boss having claimed just over a year ago that the coming of AI is as important as the coming of the internet.

3. It’s financially unsustainable

The one figure that has stuck in my head since reading Bain & Company’s ominous report: $2 trillion. Per year. That’s how much revenue AI will need to generate by 2030 to pay off the insane levels of investment currently being poured into data centres, chips and the like. And yet, according to the same report, it’s ‘only’ on track to generate $800 billion: a 60% shortfall. I’m no economist, but that doesn’t add up.

Enough has been written about the “patronage network, or crony capitalism” (Sarah Myers West, AI Now Institute) that is big AI finance, in which, for example, NVIDIA gives OpenAI $100bn so that OpenAI can buy NVIDIA’s GPUs, to no one’s benefit other than that handful of billionaire-run companies. I also call this “human centipede capitalism”. But let’s move swiftly on from that grisly image.

The absurdity of these economics is why my compatriot Ed Zitron recently wrote 19,000 words on “The Enshittifinancial Crisis”. Drawing on the excellent Cory Doctorow’s “enshittification” theory, Zitron essentially asserts that the AI food chain is:

Profitable NVIDIA > Data centres financed by debt > Unprofitable model makers > Unprofitable startups

In other words, a house of cards that will collapse should just one of these links fail. “None of these companies can afford their bills based on their actual cashflow”, asserts Zitron; “generative AI is not a functional industry, and once the money works that out, everything burns.”

Zitron cites several cases in point. “Neoclouds” such as CoreWeave and Nebius, which rent out GPU compute to companies like OpenAI, are doomed to fail, not least because OpenAI’s contract allows it to pay CoreWeave a year later. And Zhipu, China’s equivalent of OpenAI, is currently running at a net loss of $334m on just $27m in revenue; its listing on the Hong Kong stock exchange has not improved its financial prospects.

All of which (and I highly recommend taking the time to read Zitron’s extra-long article; it’s worth it) leads to the damning conclusion that AI is:

“Inconsistent, ugly, unreliable, expensive and environmentally ruinous, pissing off a large chunk of consumers and underwhelming most of the rest, other than those convinced they’re smart for using it or those who have resigned to giving up at the sight of a confidence game sold by a tech industry that stopped making products primarily focused on solving the problems of consumers or businesses some time ago.”

4. It would pave the way for more responsible AI

Zitron’s friend, the prolific and genial Doctorow, then stepped up in The Guardian with a simple yet effective message: “AI companies will fail. We can salvage something from the wreckage”. Whilst accepting that the bursting of the bubble, inevitable in his opinion, will be ugly (cf. his stock-market figure in my intro), Doctorow encourages us to look at what will be left behind when it does:

> We will have a bunch of coders who are really good at applied statistics. We will have a lot of cheap GPUs, which will be good news for, say, effects artists and climate scientists, who will be able to buy that critical hardware at pennies on the dollar. And we will have the open-source models that run on commodity hardware, AI tools that can do a lot of useful stuff, like transcribing audio and video; describing images; summarizing documents; and automating a lot of labor-intensive graphic editing… These will run on our laptops and phones, and open-source hackers will find ways to push them to do things their makers never dreamed of (…)
>
> It’s the bubble that sucks, not these applications. The bubble doesn’t want cheap useful things. It wants expensive, “disruptive” things: big foundation models that lose billions of dollars every year.
>
> When the AI-investment mania halts, most of those models are going to disappear, because it just won’t be economical to keep the datacenters running.

In other words, when the electricity and the money run out (and even Musk agrees the former will), what’s left will be everything that isn’t “big AI”: smaller, open-source models that can run in ‘traditional’ data centres, or directly on our computers and smartphones, with a tiny fraction of today’s AI impacts.

Useful AI, as we could call it. AI that makes our lives easier without ruining the planet.

A bit like it already does in China, whose approach to artificial intelligence even AI boomer Eric Schmidt prefers to that of the West, and where, what’s more, it runs on solar energy.

One day…

Featured image by TANMAY GHOSH on Pexels.com

Nice article James – aligns with a lot of my thinking from a while back: https://bettertech.blog/2026/01/23/why-the-big-ai-bubble-could-burst-in-2026-and-why-this-might-be-a-good-thing/

And more recently: https://blog.scottlogic.com/2025/11/12/balancing-ai-innovation-sustainability-hm-treasury-id25.html

Thanks Oliver, I’ll have a read (of the 2nd one; the first link is my article 😅)
