As highlighted in our previous article, there is every reason to believe that the environmental impact of generative AI is set to increase significantly: sevenfold by 2030, according to the latest study by the GreenIT Association. The need for frugal AI therefore seems obvious.

But how can we assess these impacts on a daily basis, given the opacity of the main AI providers?

Even three years after the launch of ChatGPT, the data available on the real environmental impacts of AI remains so vague, and the lack of a universally imposed standard is so glaring, that we can only talk about assessment, or evaluation, as opposed to real measurement.

Does this mean we’re stuck? Not necessarily!

AI providers aren’t telling us everything

Free of any regulatory obligations, the main providers of generative AI models only disclose what they choose to about the impacts of their products.

The worst offender in this area is OpenAI, creator of ChatGPT, by far the most widely used generative AI service in the world today (800 million users per week)… and the most opaque.

Sam Altman, CEO of OpenAI, stated in a June 2025 blog post on a completely different subject that a ChatGPT prompt costs 0.34 Wh of energy and 0.00032176 litres of water. Shortly afterwards, Google stated – surprise, surprise! – that a typical Gemini prompt consumed less energy (0.24 Wh) and water (0.00026 L) than that of its main competitor.

Unlike OpenAI, whose figures were given without any context, Google relied on seemingly scientific research, formalised in a very serious white paper. But in both cases, the lack of key data was glaring:

- What about the number of tokens per prompt? Without this, we don’t know what is being measured

- What about the total number of prompts per week? Without this information, we have no idea of the total impact (but if we consider the 2.5 billion prompts received by ChatGPT every day, this impact is clearly significant…)

- What about location-based emissions? Google relied on market-based emissions, which can be 3-4 times lower

- What about the energy mix/carbon intensity of the data centres where training and inference took place?

- What about the impacts of model training?

- What about the hardware impacts (for training and for the user)?

AI cannot credibly be called frugal without answers to all these questions, among others.
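To see why the missing volume data matters, the per-prompt figures quoted above can be scaled by the 2.5 billion daily prompts. The sketch below is a back-of-the-envelope calculation, not a measurement: it assumes every prompt costs exactly the stated average (which neither provider has confirmed), and the function and variable names are purely illustrative.

```python
# Back-of-the-envelope scaling of the per-prompt figures quoted above.
# Assumption: every prompt costs exactly the stated average, which the
# providers have not confirmed. Names are illustrative only.

WH_PER_PROMPT = 0.34            # energy per ChatGPT prompt (Wh), as stated by Sam Altman
LITRES_PER_PROMPT = 0.00032176  # water per ChatGPT prompt (L), same source
PROMPTS_PER_DAY = 2.5e9         # daily ChatGPT prompts cited above


def daily_totals(wh_per_prompt, litres_per_prompt, prompts_per_day):
    """Return (energy in MWh, water in cubic metres) for one day."""
    energy_mwh = wh_per_prompt * prompts_per_day / 1e6    # Wh -> MWh
    water_m3 = litres_per_prompt * prompts_per_day / 1e3  # L -> m3
    return energy_mwh, water_m3


energy_mwh, water_m3 = daily_totals(WH_PER_PROMPT, LITRES_PER_PROMPT, PROMPTS_PER_DAY)
print(f"Energy: {energy_mwh:,.0f} MWh per day")
print(f"Water: {water_m3:,.0f} m3 per day")
```

Under these assumptions, the result is roughly 850 MWh of electricity and about 800 cubic metres of water per day, for inference alone and before any of the unanswered questions above are taken into account.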

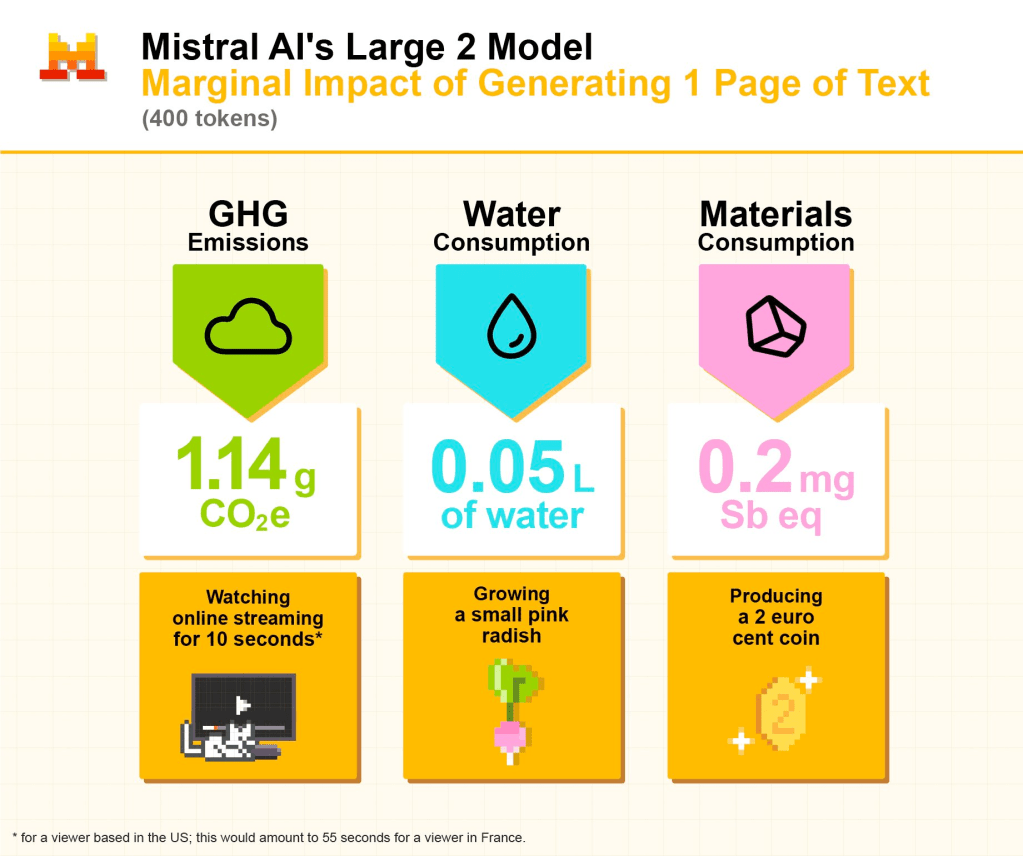

At around the same time, Mistral AI released the most comprehensive life cycle assessment (LCA) to date on the impacts of generative AI content creation. This analysis was validated by Resilio, Hubblo and ADEME, and complies with AFNOR’s Frugal AI standard.

Unlike the incomplete statements made by Mistral AI’s American competitors, this analysis had the advantage of specifying how many tokens were analysed, and of including hardware impacts by measuring the consumption of mineral resources needed to manufacture the GPUs and servers that make generative AI outputs possible.

However, the lack of data on one absolutely key factor – energy – is perplexing. And while the analysis complies with the 10 environmental impact criteria listed by AFNOR, six others, which are key to a complete PEF (product environmental footprint) analysis, are ignored. These include eutrophication and land use, to name but two.

Drilling down on the 16 PEF criteria

As you might guess, we’ve reached the limits of what the creators of generative AI models are willing to disclose. For now, the future of frugal AI therefore depends on third-party initiatives from researchers, NGOs and other associations.

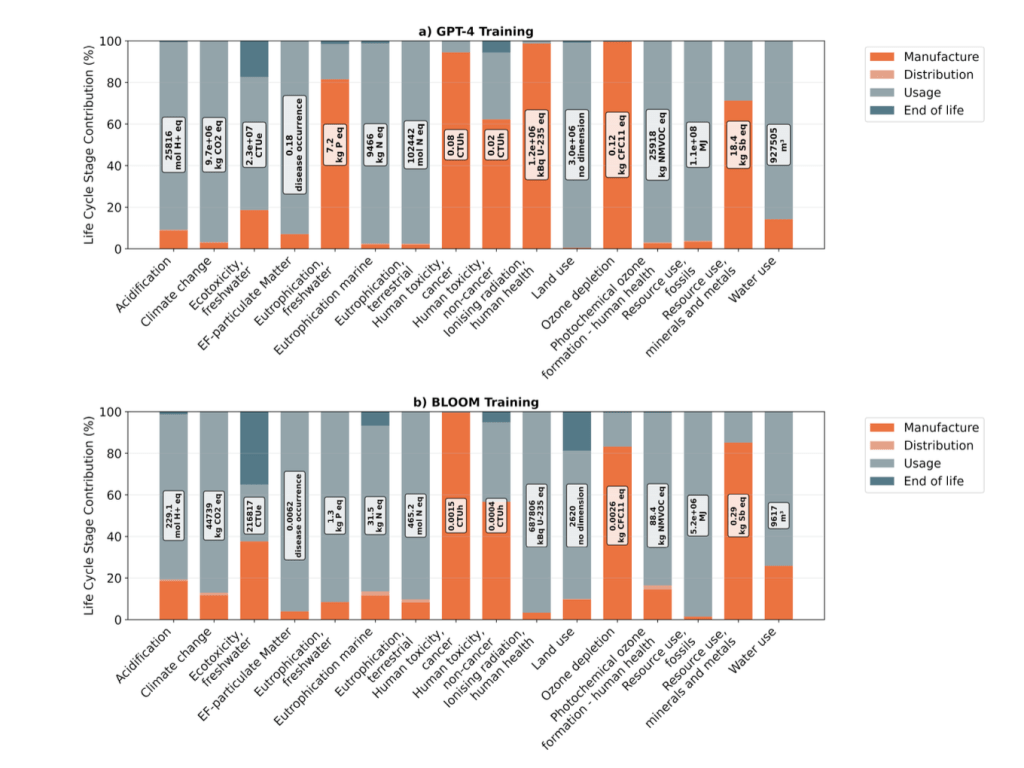

The first true LCA of AI was not that of Mistral AI, but rather that of a group of researchers led by Sophia Falk of the University of Bonn in Germany and David Ekchajzer of Hubblo. Together with others, including the indispensable Sasha Luccioni from Hugging Face, they collected NVIDIA A100 GPUs (the generation before the H100, which is the most widely used GPU for AI today).

They then literally crushed them in order to analyse their physical components. Why? Because manufacturers, led by NVIDIA, do not do this. They simply publish ‘LCA’ reports that only detail the carbon impact of their GPUs, which is one PEF criterion out of 16.

These GPU powders were then analysed using the 16 PEF criteria, which yielded the above results, detailed in this white paper, released in December 2025. While the results are not always easy for the average person to understand, they confirm two key factors of frugal AI:

- Emissions/carbon footprint represent only part of the environmental impact of AI.

- There is an urgent need to be able to run more models on less hardware.

These findings were then corroborated by a Green IT Association study, this time based on an analysis of H100 GPUs, which found that 69% of AI’s impact is not related to emissions. The main impact categories are:

- Global warming potential: 31%

- Depletion of abiotic resources (fossil fuels, metals, minerals): 21.4%

- Fine particle emissions: 18.5%

- Eutrophication: 18.3%.
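A quick check of these figures shows where the 69% comes from and how much of the total the four listed categories cover. This is only arithmetic on the percentages quoted above; the dictionary keys are illustrative labels, not the study's own wording.

```python
# Impact shares quoted above, in percent of the total impact.
# Keys are illustrative labels, not the study's own category names.
shares = {
    "global_warming": 31.0,
    "abiotic_resource_depletion": 21.4,
    "fine_particles": 18.5,
    "eutrophication": 18.3,
}

non_climate = 100.0 - shares["global_warming"]  # everything except emissions
listed_total = sum(shares.values())             # the four categories listed
remaining = 100.0 - listed_total                # other impact categories

print(f"Not related to emissions: {non_climate:.1f}%")
print(f"Covered by the four listed categories: {listed_total:.1f}%")
print(f"Spread across other categories: {remaining:.1f}%")
```

The four categories listed account for 89.2% of the total, which leaves 10.8% spread across the remaining impact categories.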

Hence the three recommendations for frugal AI that conclude this study:

- Create an ‘AI sobriety’ plan to contain supply and control demand

- Create a Frugal AI Centre of Excellence

- Host AI in countries where electricity has the least impact (which remains a ‘quick win’ that does not solve the underlying problem).

What we do know

That said, there are plenty of options available for assessing the impact of everyday AI use.

First, ask your cloud provider! Even smaller providers, such as Scaleway or OVH, provide dashboards to monitor emissions, water consumption and (ideally) the hardware impacts of your cloud activity. The emissions from your AI activity should be detailed in these same dashboards. This is already the case with Google, for example, which details the emissions associated with the use of each of its cloud products, including AI.

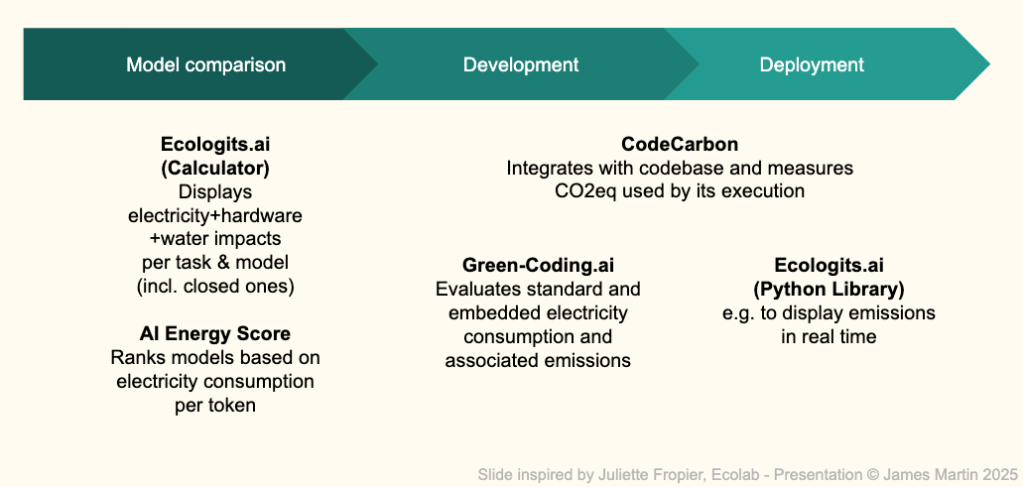

Next, at each stage of the design of an AI project, different tools can be used to assess the impacts:

(Slide from my GreenIT.fr “Frugal AI” training course)

Although all these tools are the result of unofficial initiatives, they are becoming increasingly sophisticated and are being used by more and more large companies.

Take EcoLogits, for example:

- Its calculator can estimate the impact of a particular AI model before it is chosen (simply multiply the impact of a query by the number of times it will be performed per day, and by the number of users, over the desired period; see Sonia Tabti, here)

- Its Python library can be integrated into the client’s code to provide real-time estimates. This is how, for example, the SNCF Group’s internal LLM client displays the emissions of each prompt submitted by its users. Not bad for a company with 290,000 employees!
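The projection described in the first point above is a simple multiplication, which can be sketched as follows. This is a minimal illustration rather than EcoLogits code: the function name is hypothetical and every input value is a made-up placeholder, to be replaced with a per-query estimate from the calculator.

```python
# Projection of the kind described above: per-query impact multiplied by
# queries per user per day, number of users, and number of days.
# All input values are hypothetical placeholders, not EcoLogits output.

def projected_impact(per_query, queries_per_user_per_day, users, days):
    """Scale a per-query impact (in any unit) to a whole deployment."""
    return per_query * queries_per_user_per_day * users * days


# Hypothetical scenario: 2 gCO2e per query, 10 queries per user per day,
# 1,000 users, over 220 working days.
total_g = projected_impact(
    per_query=2.0,
    queries_per_user_per_day=10,
    users=1_000,
    days=220,
)
print(f"Projected emissions: {total_g / 1e6:.1f} tCO2e")
```

With these placeholder values the projection comes to 4.4 tonnes of CO2e. The same function works for any other indicator (energy, water, abiotic resources), as long as the per-query value and the result are read in the same unit.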

Compar.IA, the French government’s excellent LLM comparison tool, also relies on EcoLogits.

Of course, no tool is perfect: EcoLogits only evaluates text generation, whereas we know that image generation consumes significantly more resources.

It also suffers from the fact that the most widely used models – the latest GPT and Gemini – are closed, so it has to “guess” their impact by comparing them with open-source models of equivalent size. The same applies to AI Energy Score, among others (although the latter has just added a very useful model ranking feature…).

The answer is therefore to use a combination of evaluation tools, according to your needs. Also worth a look is SCI for AI, the artificial intelligence version of the Green Software Foundation’s ISO-validated Software Carbon Intensity standard.
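For readers unfamiliar with it, the base Software Carbon Intensity score is defined as SCI = ((E × I) + M) per R: operational energy times grid carbon intensity, plus embodied hardware emissions, divided by a functional unit. The sketch below illustrates that base formula only; the AI-specific version may define its boundaries and functional units differently, and all numbers in the example are hypothetical.

```python
# Sketch of the base Software Carbon Intensity formula: SCI = ((E * I) + M) per R.
# E: energy consumed (kWh), I: grid carbon intensity (gCO2e/kWh),
# M: embodied hardware emissions allocated to the software (gCO2e),
# R: number of functional units (here, prompts served).
# All numbers in the example are hypothetical.

def sci(energy_kwh, intensity_g_per_kwh, embodied_g, functional_units):
    """Return the SCI score in gCO2e per functional unit."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational_g = energy_kwh * intensity_g_per_kwh
    return (operational_g + embodied_g) / functional_units


score = sci(
    energy_kwh=120.0,
    intensity_g_per_kwh=50.0,
    embodied_g=4_000.0,
    functional_units=100_000,
)
print(f"SCI: {score:.2f} gCO2e per prompt")
```

Because the score is a rate per functional unit rather than a total, it is best suited to comparing two versions of the same service; total impact still has to be tracked separately.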

Conclusion: Assessing the impacts of AI is possible!

Whatever the solution, we can no longer say ‘we cannot know’ when it comes to the impacts of AI.

Admittedly, precise measurement and an internationally imposed standard are still sorely lacking when it comes to frugal AI. But we can already:

- Know in advance whether a particular AI tool is likely to jeopardise a company’s (IT) emission reduction targets

- Set environmental limits that must not be exceeded by our AI systems

- Use assessment tools to:

  - make users more aware of the impacts of AI

  - include the level of frugality of a particular AI solution in IT purchasing policies (projects like these are already underway at Orange and La Poste Group, for example).

Together, we can do it!

Learn about frugal AI to act quickly and effectively

To find out more, you can:

- Wait for the next article in this series

- Sign up for the next session of the ‘Frugal AI’ training course: all you need to lead your company towards more frugal AI…

Featured image by Steve Johnson: https://www.pexels.com/photo/29525870/