“We can’t quantify AI’s impact, we don’t have enough precise data.” Oh really? It’s largely in reaction to assertions like this that I first set about compiling the above stats in late 2024. Because, whilst a lot of figures floating around are questionable – most famously the assertion that 1 request to ChatGPT uses as much energy as 10 Google searches (this depends on too many variables) – the macro trends are undeniable. E.g.:

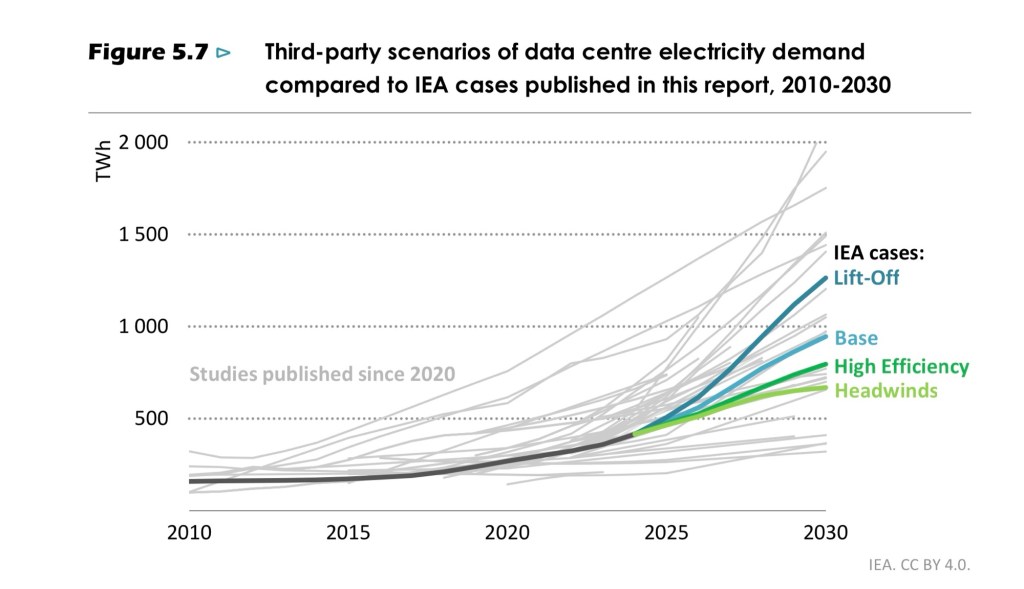

- Energy consumption of data centres in the US and Europe is set to double, or even triple, by the end of this decade, due to AI.

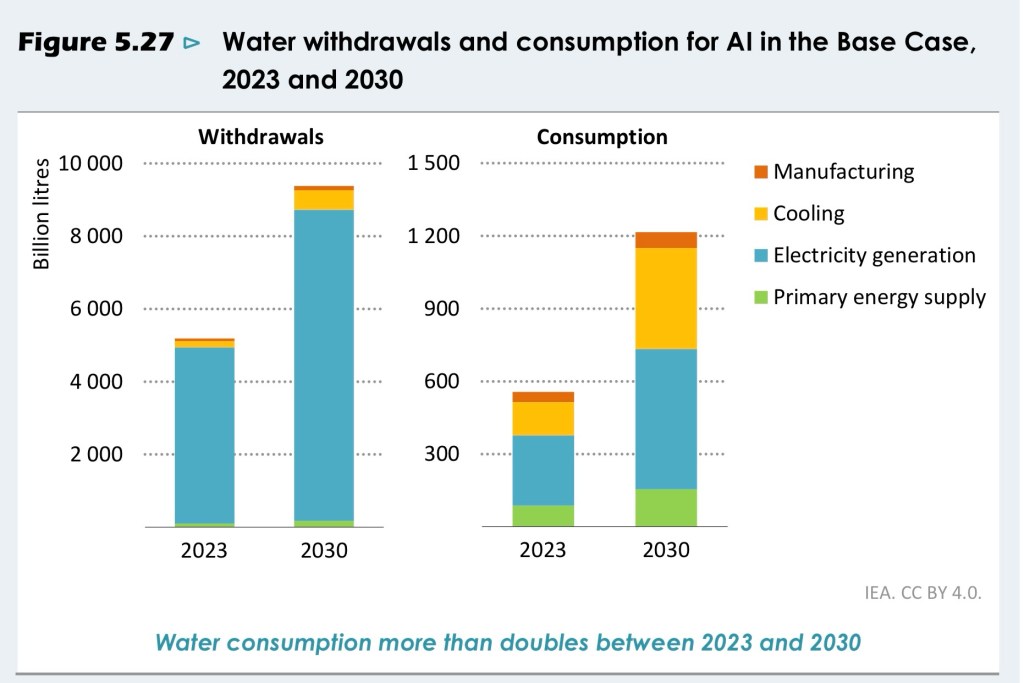

- Water consumption by data centres could also as much as quadruple, for the same reasons.

- As nuclear power stations can’t be built quickly enough to cover this demand surge, fossil fuel usage will be extended, slowing down the energy transition.

All of the above was already true before Trump started dismantling US climate policy; before the EU said it would roll back CSRD sustainability reporting demands; and before the AI Action Summit announcement of €30-50bn investments in AI data centres in France, which would double the country’s data centre power capacity.

What about trying to make the models more frugal, so fewer data centres are needed in the first place?

As the presentation also points out, smaller models are just one of the solutions we can adopt to limit the surging impact of AI. And these solutions can – and must – be applied across all three pillars of Green IT, namely data centres, hardware and software. Icing on the cake: many of the solutions are great for data sovereignty too.

The “throw ever more computing power at it” approach personified by OpenAI is not only totally unsustainable – financially as well as ecologically speaking – it’s also not inevitable. So let’s get to work on alternatives!

PS: you can also watch this presentation in just 9 minutes, below 🙂

Update April 2025: world-leading energy authority the IEA released a 300-page report on AI’s energy consequences… but dropped the ball on the key question: is AI a net positive or negative for the environment? As long as we can’t say, I’m leaning more towards “negative”. Cf. my LinkedIn post on the topic:

Make no mistake, the International Energy Agency’s (IEA) latest report on AI and energy (https://lnkd.in/exbYT2-m) is a landmark document: 300 pages of concrete examples showing how AI, despite its surging energy demand, can be used to optimise energy efficiency in countless ways.

But on the crucial & recurring question of whether AI is a net positive for the planet, it falls short.

1. It claims recent estimates of energy demand from data centres diverge too widely (above) because tech companies’ reporting is too opaque (correct), but then settles on a median doubling of energy demand by 2030, when the majority of those reports actually agree (cf. above slides) on a tripling by 2028.

2. Similarly, on water, it opts for a conservative doubling of data centre (DC) consumption by 2030 (above), when other reports predict a quadrupling… by 2028. It also misses the main reason for this: big tech loves cooling towers, which can use at least 25 litres of water per second.

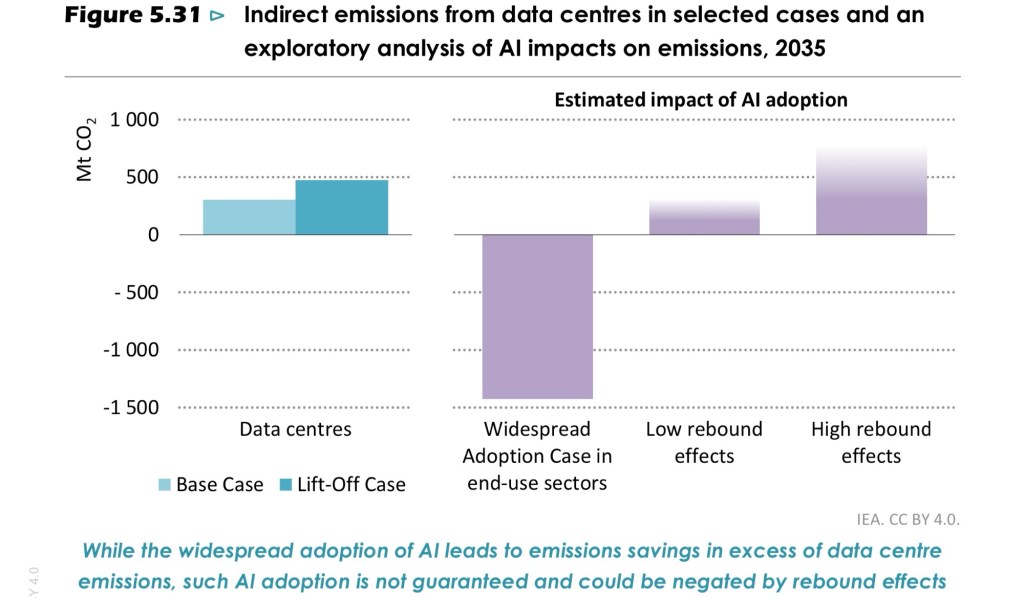

3. It notes several times that data centres (ergo the cloud) are one of the few sectors in the world whose emissions are actually growing right now (above). Good point. I wonder why?

4. But it then drops the ball when it comes to the final “net positive?” verdict. The IEA thinks data centre emissions will peak and decline after 2030, and that AI will enable a 4% reduction in the energy sector’s emissions by 2035. It then fudges the key determining factor here (rebound, or indirect, effects) by saying we don’t have enough data on them (above).

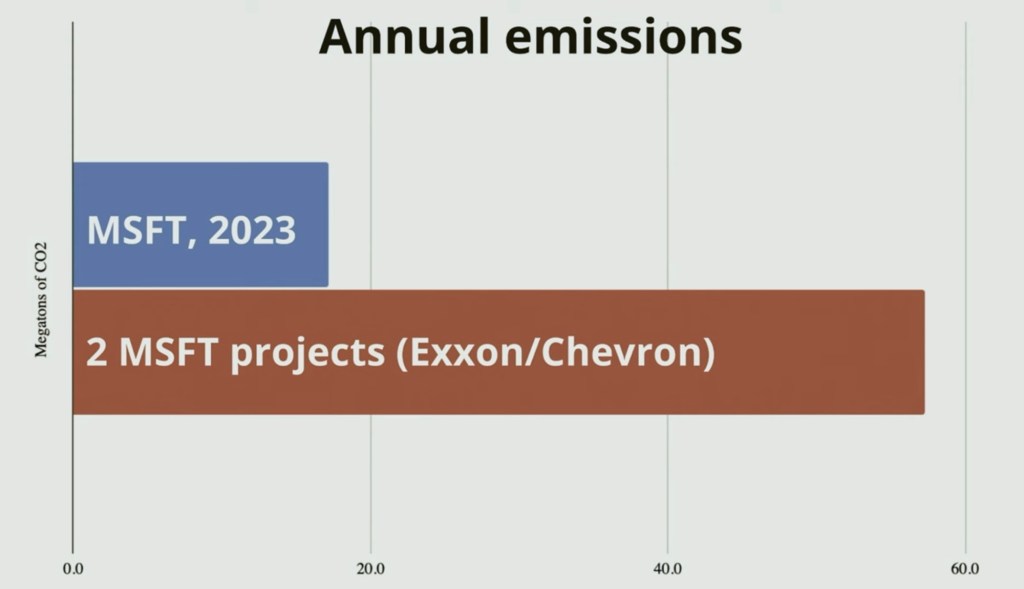

5. We may not have enough data, but we do have some. Take former Microsoft AI engineer Will Alpine’s assertion that the emissions enabled by Microsoft AI products in just two projects, for Chevron and ExxonMobil, are 3-4x greater than M$’s total emissions. That’s quite a rebound (the above image is from Will’s latest keynote, here).

6. The report makes little mention of the fact that GPUs for AI are consuming more and more energy. For example, NVIDIA’s 2027-generation chips will consume five times more energy per rack than the current generation, and could require 90 litres of water per minute to cool (via Mark Butcher).

But it’s Elon who is possibly doing the best job in the world right now of illustrating the environmental and social harms of AI, direct and indirect. The Guardian has just revealed that the Memphis data centre housing xAI’s Grok supercomputer is illegally powered by 35 methane gas-burning generators, not 15 as initially thought. Musk is taking advantage of a legal loophole by pretending these generators are temporary. Not only is methane one of the most potent greenhouse gases; these generators also emit pollutants into a neighbourhood already suffering from above-average levels of cancer and asthma. And the DC consumes 5 million litres of water per day, with the local authorities’ blessing.

AI can indeed be a force for good, if it’s deployed in a frugal and responsible way. But as long as the world’s richest people are allowed to do the opposite, how can anyone claim AI is a net positive for the planet right now?